|

Technical Papers |

|

This paper summarizes a talk given at “Lisp50@OOPSLA,” the 50th Anniversary of Lisp workshop, Monday, October 20, 2008, an event co-located with the OOPSLA’08 in Nashville, TN, in which I offered my personal, subjective account of how I came to be involved with Common Lisp and the Common Lisp standard, and of what I learned from the process.

The account highlights the role of luck in the way various details of history played out, emphasizing the importance of seizing and making the best of the chance opportunities that life presents. The account further underscores the importance of understanding the role of controlling influences such as funding and intellectual property in shaping processes and outcomes. As noted by Louis Pasteur, “chance favors the prepared mind.”

The talk was presented extemporaneously from notes. As such, it covered the same general material as does this paper, although the two may differ in details of structure and content. It is suggested that the talk be viewed as an invitation to read this written text, and that the written account be deemed my position of record on all matters covered in the talk.

D.3.0 [Programming Languages]: General - Standards.

Design, Documentation, Economics, Experimentation, Human Factors, Languages, Legal Aspects, Management, Standardization.

Common Lisp, ISLISP, ANSI, ISO, History, Politics, Funding, Copyright, Intellectual Property.

Copyright © 2008 by Kent M. Pitman. All Rights Reserved.

The content herein comprises my personal impressions and recollections based primarily on direct observation. Since this account was not created contemporaneously, and since memories sometimes grow hazy, it may sometimes deviate from literal truth.

This is a story about personal history and growth. It might on occasion appear to portray certain individuals—primarily myself, but perhaps others—in an unflattering light. Part of the point of this paper is that we have each grown through our respective experiences. There is no intent to disparage anyone.

Because this is a personal story, I have chosen to present it in the first person in order to emphasize its nature not as a scholarly work but rather as a personal account, relying primarily on personal knowledge, belief, and recollection. I’ve attempted to give an accurate account, but it’s nevertheless possible that some statements could be in error; since I can’t say which, I can only call your attention to the possibility that it could happen and ask you to accept the material offered here according to its nature. Much of the point of what I have to say is about lessons I have taken away from this experience, and I hope the value of that will transcend any errors in specific detail.

Someone once claimed to me that the problems with standards work is that the process is so exhausting that no one who ever finishes the task wants to try another, so everyone involved is always brand new and no knowledge ever propagates from cycle to cycle. This isn’t literally true, but there is still some truth to it. It’s clear that many of the people involved in standards work are not professionally prepared for the experience, and so there is a lot of confusion and on-the-job training early in the process. This account by me here may therefore be regarded as my attempt to share my experiences so that perhaps others seeking to participate in future standards efforts may start out a little ahead of the game.

I begin in the style of Michener, by offering some background that will help me to make certain later points. The general point to be made is that a great deal of what goes into a standard, and perhaps into any bit of historical narrative, amounts to chance, felicity, or luck. That is, while at some level of abstraction, it may seem that two people of equivalent training might be interchangeable for a particular role, there will necessarily turn out to be particular incidents for which a particular individual is either more or less well-prepared based simply on who they are or what their individual experiences have been. Sometimes, as I think you’ll see here, those details can affect how things play out in surprising ways.

The Artificial Intelligence Laboratory and the Laboratory for Computer Science at MIT were closely coupled, and I came to them somewhat by chance, dragged along by someone I’d met elsewhere on campus—another chance event. It was common in those days for guest, or “tourist,” users to sit at others’ computers in the middle of the night in order that precious machine cycles did not go to waste. I quickly moved from being a tourist user to being a regular user by taking advantage of the Undergraduate Research Opportunities Program (UROP) to become part of the team that was developing MACSYMA, a large program capable of performing symbolic algebra. As part of the MACSYMA group, I began work on an undergraduate thesis project, which was to write a FORTRAN-to-LISP translator for use in translating the IMSL FORTRAN library, making it available for MACSYMA.

One day while working with the MACSYMA group, I was around when someone was assigning offices—or desks, really, since offices were generally shared. “Where is mine?” I asked, somewhat jokingly. As far as I knew, only graduate students were entitled to offices. The person doing the arranging didn’t realize I was joking and responded in seriousness that he didn’t know and would try to find one for me. Of course, I immediately went to the several other undergraduates working on the same project and said, “they don’t know we’re not supposed to have offices.” But soon we all did have offices, or at least desks in offices with dedicated VT52 terminals atop them. My office was shared with Guy Steele, co-inventor of the SCHEME programming language, and JonL White, author and maintainer of the MACLISP compiler.

I spent nearly all of my hours at the Lab, often to the detriment of my class work, which is why I took 5 years to graduate. During my time there, I worked mostly for credit, only very occasionally for tiny amounts of money—about $5 per hour for a small number of hours a week programming MACSYMA, as I recall. Most of my time was as a volunteer, and in that time I did some additional work on MACSYMA but also some personal projects.

During all of this, I picked up a lot of trivia about the MACLISP language, which was not very well-documented. David Moon had written a manual a few years earlier, but it was out of date and featured primarily the Honeywell Multics implementation of MACLISP, not the PDP10 implementation. Also, as it happened, Don Knuth had just come out with TEX typesetting language and I was looking for possible uses for it, so I attempted to set up some formats for writing a manual. When that produced something pleasant to look at, it occurred to me that I should just make a whole manual about MACLISP.

The original design of the Common Lisp language, culminating in the 1984 publication of Common Lisp: The Language was designed not by an ANSI committee but just by a set of interested individuals. I was not a founding member of the group, although my officemate Guy Steele was. I was vaguely aware that there was some sort of thing afoot, but my specific involvement came slightly later through the same kind of accidental path that had led me to Lisp itself.

Although I was not directly involved in how funding arrived to our group, I was vaguely aware that ARPA was very interested in being able to connect up the programs that resulted from research it had funded at various universities and research labs. Because many of these facilities used different dialects of LISP, ARPA was inclined to try to get them all to use the same dialect. They were leaning toward concluding that INTERLISP was the dialect of choice because it seemed to be deployed at more locations than any other single dialect, but a case was made that many MACLISP variants were really the same dialect and could be collected under a single Common Lisp banner.

In part, this was an issue of simple territorialism. The MIT crowd would have preferred to use a dialect of Lisp more similar to the MACLISP dialect it had been using. But at another level, there was perceived to be a serious technical issue: INTERLISP was perceived as a very complex design, including a very controversial facility called DWIM, that many felt would not be a suitable base for the kind of system programming that MACLISP programmers were used to doing.

In effect, there was a fight to the death between Common Lisp and INTERLISP because ARPA was not willing to fund work in both dialects going forward. And after Common Lisp: The Language was published, Common Lisp succeeded and INTERLISP largely disappeared within a small number of years.

This was unfortunate, of course, because although the nascent Common Lisp community really didn’t desire to destroy all of that investment in INTERLISP, they did simply want to survive. The INTERLISP community was renowned for its user interfaces, for example.

Someone once observed to me, however, that the cost of any such battle is that later the individuals who have lost out or otherwise been alienated will eventually need to be repatriated with the community. At that point, some compromise is often needed in order to bring them back

Change was happening to MACLISP very rapidly in the late 1970’s and early 1980’s. It was common for MACLISP users to read their mail only to find that some critical semantics in the language had changed and that it was time to update programs they had already written to accommodate the new meaning.

Jokes were made about the frequent and extreme nature of the changes. An extreme example illustrates the style, where ITS was the operating system MACLISP was developed under and TOPS-10 was a competing operating system:

As of next Thursday, ITS will be flushed in favor of TOPS-10.

Please update your programs.

The pace of change was quite exciting for those doing research on programming languages, but it was far less good to those trying to build programs that used the programming language as a stable commodity. Commercial development required more.

The push for a standard, therefore, was intended to create better stability of programming language semantics. In particular, standardization was not intended to stop research and development of languages, but rather to give commercial developers shelter from the pace of rapid change that was expected to continue.

During the creation of the original Common Lisp standard, there was only very limited communication with Europe and Asia. Mail delivery was often delivered via a protocol called uucp (Unix to Unix copy) which required mail to be explicitly routed by hops from one machine to another. Mail was not continuously delivered but was instead queued for periodic batch delivery. Unfortunately, this meant it could take 2-3 days to get a message across the ocean and to receive a reply back. A consequence of this was that there were practical difficulties including Europe and Asia in design discussions about Lisp; the turnaround time was just too slow.

It seemed to me, at least, that the creation of the Eulisp effort in Europe was pretty much a direct reaction to having been excluded from the American discussions of Common Lisp. It was my impression that a number of individuals took the exclusion quite personally. I often wonder if this particular dialect ever would have happened had we made a stronger effort to keep Europe involved in early Common Lisp standardization efforts.

It happened in 1982 that I needed a ride to the AAAI conference, which was to be held in Pittsburgh. Glenn Burke had planned to drive and offered me a ride assuming it was ok with me that he stayed an extra day to attend a Common Lisp design meeting. “Oh,” I asked, “Is that something I could go to?” He got me info on the items being voted on and told me to study up. I showed up well-informed and was allowed to vote. That’s how I became involved in the design of Common Lisp.

There were a few more in-person meetings, all of which I believe I attended, although most of the design was done by email. Much later, in 1993, the specific online design processes we used were later studied and written up by researchers at the MIT Sloan School [Yates93].

When the manual was done and it was time to publish it, I was asked to sign over the copyright to MIT as part of the publication process. This seemed wrong to me, since I had done the work myself without anyone paying me, but I wasn’t sure what option I had, so I eventually just said ok. The manual sold two press runs and I computed later that MIT had made about $17,000 in net profit (the equivalent of my first year salary after graduating). I saw none of that money—all because I didn’t realize I should say, “Wait a minute. You didn’t pay for it and I won’t sign it over.”

Around this time, the Symbolics Lisp Machine system came out with an object-oriented paradigm for error handling. Called the “New Error System” (or “NES”), it was described in a document titled Signaling and Handling Conditions. [Moon83]

I was amused to hear one Lisp Machine user say “Finally, a second use for Flavors.” Flavors was an object-oriented paradigm that became a strong influence on the design of CLOS. Some users were having difficulty figuring out what they were intended to do with such a system. Since almost all examples of its use involved customizing the window system, many users had come to believe, mistakenly, that Flavors was just a window system customization language. They had overlooked its general-purpose nature. Having a condition system based on Flavors meant that there were suddenly many examples of using Flavors for reasons other than extending the window system. As such, an unexpected positive effect of the introduction of NES was to finally break certain people of that confusion.

Even as it sought to culturally unify the MACLISP family of dialects, the emerging language necessarily contained numerous incompatible changes that required some getting used to.

Probably the most sweeping change was the introduction of a claim that the semantics of the language would apply equally whether the language was used interpreted or compiled.

MACLISP, for example, had different variable binding semantics for interpreted and compiled code. This created some strange points of flexibility where certain useful code libraries were distributed with remarks saying “must always be run interpreted” or “must always be run compiled.” However, most of the time the difference between interpreted and compiled code in MACLISP was an annoyance and an opportunity for bugs to creep in. So MACLISP used dynamic scoping in interpreted code, while in compiled code it used scoping that was sometimes called lexical scoping but was really just a way to tell the compiler not to compile using dynamic scoping. MACLISP did not create lexical closures in places where Common Lisp users would expect them.

Common Lisp was also base 10 rather than base 8. A surprising amount of noise was made about this seemingly innocuous change. Arguments were made by people used to doing system programming that base 8, being a power of 2, was more natural. (Interestingly, it was almost never suggested that hexadecimal was an appropriate base, which was probably due to the 36-bit word size used by the PDP-10, which was customarily divided into four 9-bit bytes, five 7-bit bytes, or six 6-bit bytes.) Not surprisingly, however, the general public found great appeal in use of decimal as a default radix.

In 1984, Common Lisp: The Language (or CLTL) [Steele84] was published.

There were a number of problems with CLTL.

One problem was that Common Lisp was more descriptive than prescriptive. That is, if two implementation communities disagreed about how to solve a certain problem, CLTL was written in a way that sought to build a descriptive bridge between the two dialects in many cases rather than to force a choice that would bring the two into actual compatibility. This may even have been a correct strategy since it was most important in the early days just to get buy-in from the community on the general approach. The notion that it mattered for two implementations to agree was at that point a mostly abstract concern. There were not a lot of programs moving from implementation to implementation yet. As the user base later grew and program porting became a more widespread practice, the community will to invest in such matters grew. But at the time when CLTL was published, a sense that the language design must focus on true portability had not yet evolved.

In this general time period, I submitted a paper to a conference discussing portability problems and the issue of blame attribution. I noted that the support for portability in implementations had a directional character. Some implementations were what I called “inward portable” and later came to think of as “tolerant,” preferring to be very accepting of many different interpretations of the language description. Such implementations made good targets of porting efforts but were not very good development platforms. An “outward portable” implementation, which I would later come to call simply “strict” would be a good platform for development because it would catch porting problems early during development and make it more likely that programs would port.

The problem with blame attribution was that people tended to assume that if a program had previously run in an apparently conforming implementation and then it failed to run in another, they thought that it was the second implementation that was at fault. In fact, if you had debugged your program in a tolerant implementation and then tried to move to a strict implementation, that might not be the case at all. The matter was far more subjective, and it took a while for communities to understand how to properly attribute blame when problems resulted in porting a program.

My paper on portability was rejected for reasons of being not adequately formal. The reviewers wanted more hard numbers. It seemed to me at the time that the reviewers had a too-narrow view of the kinds of value a paper might have. Had I had a magazine column or a blog site, it would have been a good topic for that, but there were none such back then. One of the positive effects of the modern publishing world is that one can publish first and decide later, lazily, about whether there is lasting merit to the thought. In the case of my paper, I think it was just detecting what would come to be seen as obvious shortly thereafter—that portability was of evolving importance and that these issues of style and usage patterns in both the writing of programs and the designing of implementations really did matter. The issue of strict vs. tolerant was increasingly in the air at the time, and soon enough would be something the user base had confronted directly.

The distinction between user guides and reference guides was also still evolving. Steele chose to make CLTL both a user guide and a reference. The community generally liked the presentation, and even today many people prefer to use CLTL as a reference, even knowing it’s not true to the language, just because they like the presentation style. But it was hard to look things up because some useful information was misplaced and other things were never really explained. For example, we learn in CLTL that the evaluation order of subforms is strictly left-to-right. This information occurs in three places: in the description of SETF, the description of DEFSETF, and the description of floating point contagion. Each of these places tells us that the facility in question preserves the normal left-to-right order of evaluation. But one’s sense is that none is intended as a primary reference; that is, it looks like any primary reference was omitted.

In addition to all these subjective and presentational issues, CLTL also had a few much more fundamental weaknesses, most acutely the absence of an error system. As I was fond of expressing it at that time: A majority of the functions described by CLTL were capable of signaling errors under at least some conditions, not all of which were things a program could test for in advance, and yet if an error was signaled, there was no way to catch that error under program control. That meant that no serious program could operate in a portable way and expect to avoid trouble. There were other technical gaps, too, but this was severe. In retrospect, had CLTL offered even just MACLISP’s ERRSET it would have been a great deal better off.

In spite of some substantial shortcomings, members of the Lisp community did rally behind Common Lisp as the language of choice for moving forward. Following the publication of CLTL, the Common Lisp language was soon widely heralded as a “de facto standard.”

Vendors tried to gain commercial traction and users tried to build applications. After about two years, though, there was feedback based on actual use that needed some kind of outlet, so a meeting was arranged in Monterey, California to talk about what would come next.

Steele arrived at the Monterey meeting carrying a short list (a page or two) of “obvious” things that needed to be changed in CLTL. It was quickly obvious that these changes would not be made, in part because nothing that seemed obvious really was.

I recall that one of these was a recommendation to make an XOR operator in the spirit of AND and OR. However, there wasn’t uniform agreement on that one because people couldn’t agree whether XOR should be a function or a macro. Because it could not be correctly evaluated without knowing the values of all of its arguments, there was no reason for the short-circuit evaluation that AND and OR enjoyed; theoretically, it could be useful to APPLY it. But some people thought it should be a special form anyway so that it matched AND and OR.

One serious problem was that a lot of new people had shown up—the users. And there was no plan for how to incorporate their votes. Some of us (myself included) thought the users should not have a vote because it was not their language. It was alleged to me that the users’ investment was in using the language. That was a new concept for me and I did not immediately resonate to it, although I have come to see this as a legitimate point of view.

But even if that were resolved, there were questions about whether to measure votes by organization or individually, etc. Rather than just make up rules, it was suggested informally by some that we should just use an existing set of rules by going with something like ANSI.

I later learned there had been the beginnings of a process to create an ISO standard for Lisp, so in that light I suppose that involving ANSI was something of a defensive action. My understanding of the significance of the ISO issue came after-the-fact, but I am told it was key at this point in time in the decision to involve ANSI.

When X3J13 first convened, representatives of ANSI or CBEMA (the Computers and Business Equipment Manufacturers Association, which later became NCITS) showed up to give us advice about how to behave as members of a standards body.

They seemed to say that the primary business they were in was to “not be sued,” and that their secondary business, if they could do it without being sued, was to make standards.

They advised us to be careful about public statements of various kinds that committee members might feel inclined to make, but that might lead to legal trouble.

It was a sobering way to suddenly realize that the process matters had changed.

Never having been part of a formal standards process, I didn’t quite know what to expect. The very fact that there are a lot of rules was very daunting and confusing.

Work was divided up. Committees were assigned to work on various subtasks.

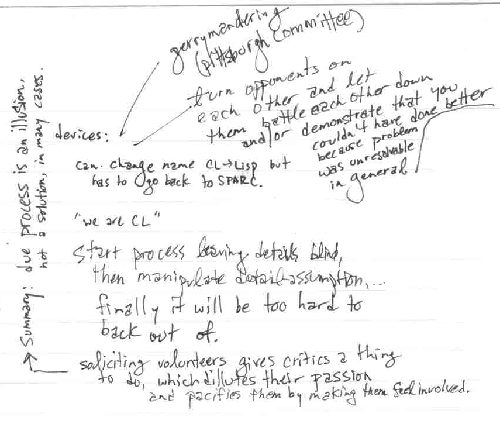

In researching this talk, I ran across notes about such division of labor that I had scribbled during an early meeting. It primarily illustrates how, in my youth, I was struggling to understand the organizational mechanisms at work. Among other things on the page I had scribbled the following phrases:

Lest someone find my handwriting illegible, the notes include these remarks:

“due process is an illusion”

“gerrymandering (Pittsburgh committee)”

“turn opponents on each other and let them battle each other down and/or demonstrate that you couldn’t have done better because problem was unreasonable in general.”

“soliciting volunteers gives critics a thing to do, which dilutes their passion and pacifies them by making them feel involved.”

“start process leaving details blind, then manipulate detail assumption, finally it will be too hard to back out of.”

I don’t know if these things really aptly described what was actually going on. They may have been, in some cases. They are just the personal guesses of someone who was new to the process and struggling to understand it. But I think it’s fair to say that early in the standards process there was a lot of tactical posturing between the committees.

It’s equally reasonable to note that while inter-corporate tactical posturing may have appeared to serve the individual vendors represented, it probably kept the vendors from cooperating in ways that later turned out to be essential. Before the process could move forward, a new understanding would have to be reached where we started to work more together, and less at odds with one another.

Ultimately, various committees were formed to work on various aspects of the standard, reviewing the relevant aspects of the language and determining whether changes or additions needed to be made. These committees included ones named Charter, Compiler, Conditions, Conformance, Namespaces, Iteration, and Objects. In addition there was a catch-all committee called Cleanup that handled small matters that didn’t seem to fit in any other committee.

All of these committees probably had stories worthy of note, but I will mention only a subset of them for reasons of space.

Sitting in a room for a good part of a meeting coming up with words to write as part of our mission did not seem like a good use of time to me at that moment. But I went along with it because there seemed no stopping it. In retrospect, I consider this a major administrative contribution and I credit the committee chair, Susan Ennis, for getting us to do it.

What I found later was that there were many times during work on the standard where people disagreed about what the right way to proceed was. In many of those cases, we might have been hopelessly deadlocked, each wanting to pursue a different agenda, but I was able instead to point to the charter and say, “No, we agreed that this is how we’d resolve things like this.”

Without a doubt, the most useful sentence in the charter was the one that said, “Aesthetic considerations shall also be weighed, but as subordinate criteria” [J13SD05]. Our goal in writing the charter had been to produce an industrial-strength language, and the time spent writing that one sentence, emphasizing the importance of pragmatics over abstract concerns about elegance, broke a lot of deadlocks. It’s not that any of us wanted the language to be unaesthetic. But in practice, given the compatibility constraints we had going into the project, there were a lot of details of the language that were simply fixed givens, and had we been obliged to fix all of those to the level of detail some were wishing for, it’s likely the process would never have terminated.

The charter also identified which projects were in scope and out of scope for our work, and which were required features and which were optional.

The time spent writing the charter later paid for itself many times over and it’s an exercise I recommend to any committee engaged in any large endeavor over a period of time.

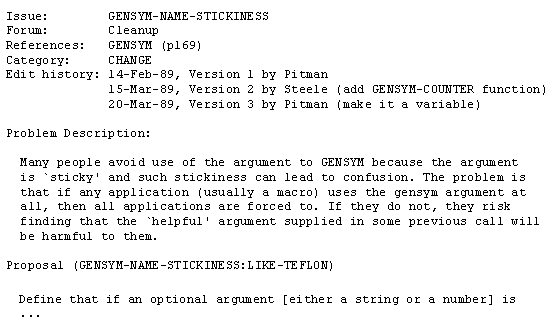

Most people who have seen the Common Lisp HyperSpecTM [Pitman96] have spent at least some time browsing the X3J13 issues section, in which the nature of various issues considered in the design of the language are recorded for history. The forms used were insisted upon by Larry Masinter. Once again, I (and perhaps others) worried that having to fill out forms was just a lot of pointless make-work. However, it quickly became apparent that he was right in advocating this approach.

Using forms with standardized fields like “problem description” and “proposal” where each proposal had to be analyzed for “cost to users,” “cost to implementers,” etc. led those submitting changes to consider their proposal from all sides before making a suggestion. It also made it easy for reviewers to determine which proposed changes were adequately explained and which were controversial.

It had an additional benefit that is a little more subtle. There was implicitly a kind of philosophy of how contributions from collaborating individuals were merged using these forms. For example, a good problem description had to satisfy everyone. If two people saw a problem from a different point of view, both people’s points of view were merged into the problem description, making the problem more complicated, and making solutions sometimes harder to achieve. But this was essential to addressing porting problems, for example. One couldn’t just solve how a file system operation would work on one operating system unless the solution was going to work on other operating systems, too. On the other hand, the “proposal” field was very different. If two people disagreed on a proposal, they each wrote their own proposal so that proposals could be internally consistent and coherent. This meant that a single problem often had several proposed solutions with different costs and benefits, and the committee had to decide which was the stronger proposal.

The procedure worked well and solved hundreds of small issues that came up. But it was not a property of the ANSI process that we used this procedure. It was unique to Masinter’s way of doing things. This was just one of many details of the process that was greatly affected by the presence of and style of a single individual.

The project was far too complicated to be completed by the committee itself. It required some sort of outside investment or it would never be done.

In 1987, Gary Brown, of Digital Equipment Corporation (DEC), arranged for the services of Kathy Chapman to be made available to the committee. Kathy became the first Project Editor and did some very important foundational work.

CLTL had been accepted as a “base document.” Under Kathy’s supervision, and with Steele’s explicit approval, CLTL was reorganized from its tutorial style to a dictionary style, working out many of the book design details that would carry through into the final work.

Within the code, she kept meticulous back-pointers to the source location of each of the bits of moved text so that it was later possible to track down the origin of passages that surprised people.

Kathy also used markup internally that distinguished the selection of an appropriate font for a function name or a variable name even though the fonts would turn out to be the same in the printed text. Although this practice had no effect on the printed text, however, it did have a subtle effect later because the TEX to HTML processor used to produce the Common Lisp HyperSpec would be able to rely on that information to create better cross-reference links.

At this time also, work began by an ISO committee designated as SC22/WG16, an international standards body concerned with Lisp. (Later, at the request of John McCarthy, Lisp’s creator, this body would clarify that its goal was to produce a dialect named ISLISP, and not to produce a standard for all Lisp. In fact, in researching this paper I found records from an early meeting of X3J13 stating that McCarthy had made a similar request there, too—that the American standard be one for Common Lisp, not for Lisp.)

Participants in the international standard included representatives from various Lisp communities worldwide, including Common Lisp, Eulisp, Le Lisp, and Scheme.

The first meeting was in Paris in 1988 and was co-located with International Workshop on Lisp Evolution and Standardization (IWOLES). Richard Gabriel was designated by X3J13 as the United States’ representative to this committee. I also attended.

In 1989, Digital Equipment Corporation (DEC), having already invested a substantial amount in hosting a Project Editor, decided it wanted someone else to take over the role. There was a call for a new Project Editor.

I volunteered—without first talking to my employer, Symbolics—with a few conditions. One condition was that funding could be found. Another was that I not have to write any status reports. I wanted to spend all my time writing. I told the committee it was fine if they sent people to peek into my office and see if I was working, but that I didn’t want to have the task be high overhead. I told them that if they could get a better offer, they should take it.

I wish I had recorded Richard Gabriel’s response to my offer because it was classic. He said something tepid like “I think Kent is the minimal acceptable kind of person to get this done.” I’m not sure by what standard he said this. But I came to believe that he was wrong. Perhaps he was focused on my technical or writing capabilities. I wasn’t the strongest technical person nor the best writer. But the job didn’t turn out to need that.

What it needed was someone who had a mix of skills, and I had a reasonably good mix. It needed me to be a technical person one day and a writer on other days.

Also, if it was going to involve someone with technical skills, it needed that person to be someone who was able to separate partisan technical advocacy (which could be done at meetings) from neutral editorial action (while editing the document). There was a lot of text changing, and it needed to change in purely editorial ways. Had I confused my being allowed to edit the document for editorial reasons with my being allowed to edit the document for technical reasons, the community would have lost faith in me. They needed to believe that I would work hard to make sure that the only changes made to the standard were those consistent with technical votes taken in the committee.

Editing, I found, is really mostly about trust.

The process dragged on. AI winter was taking hold. There was a sense that companies that wanted to make or use Common Lisp could start falling by the wayside if a usable standard was not produced soon.

Although Franz Inc. was a business competitor with my employer at the time, Symbolics Inc., the relationship between the various Lisp vendors had improved and it was becoming clear to all that they had a common “enemy”: C++.

Because of this, an unusual thing happened: Franz Inc. set about locating funding for the editing work I was to do while at Symbolics. Thanks to efforts by Hanoch Eiron, Fritz Kunze and Gene Kromer, and perhaps others at Franz Inc., funding contracts were created, and various different companies were lined up to each reimburse Symbolics for a few months of my work at a time. Those companies were Franz, Harlequin, Apple, and Lucid. (And for at least some of the time, Symbolics itself paid my salary, although they did not write a contract with themselves.)

The contract really didn’t promise much. It mostly just said that I would do some editing work and that someone would pay me. This frustrated the lawyers at most of the companies that ended up signing up, causing them to contact us and complain that the contract really had “no deliverable,” which was true. It was too complicated to figure out what to promise and so we left it vague. In each case, someone at the relevant company intervened, telling their lawyers not to worry about it. The contract got signed, sometimes with minor wordsmithing, and the work proceeded.

The resulting work product was intended to be placed in the public domain. There was later some concern that this had not been done effectively, however, since the resulting document lacked a proper notice to that effect. By the time this issue was raised, Symbolics as an entity did not exist. Nevertheless, the contract clearly intended that the resulting document be placed into the public domain. And for the most part, it has seemed to me that the community has consistently functioned as if that is what happened. For more than a dozen years (at the time of writing this paper), and in particular since the time each draft for circulation was created, the source files to the various drafts have been downloadable by anonymous FTP [Xerox92].

Importantly, there was no sense in which the work to create these was contracted by X3J13 itself. The job of the committee was not to decide wording of the final spec, but rather to decide truth. Since copyright is about control of the form of an expression, and not the content, X3J13 had not involved itself as an author, nor had it paid for the work. This would matter later.

Under some pressure from Digital Press to produce a new edition of CLTL, Steele finally complied in 1990 by publishing Common Lisp: The Language, Second Edition [Steele90], sometimes also known as CLTL2. With that act, the landscape of Lisp language descriptions got a lot more complicated.

The original book, Common Lisp: The Language, was the result of committee discussion. The content, quality, and timing of the original book was subject to committee control. Not so for this new work. Although Steele informed the committee of his plan to do the work, he did not really allow the committee strong control of whether the work was to occur, what would go into it, or whether the result was suitable. Some thought it was a good idea to do it, some thought not. But in the end it was both Steele’s right and his private decision to go ahead and publish the book.

Admittedly, the original Common Lisp committee had no specific standing as a standards body, but then again, in 1984 at the time of the publication of CLTL, investment in implementing and using the language was less, so it didn’t matter as much. After Common Lisp started to be used more heavily, it started to really matter who had standing to speak about the language because users and vendors needed to be able to make plans about which changes would and would not become available in implementations and because portability among implementations was a hot topic.

The automatic assumption by many, because it was from the same author and had a similar title, was that the Second Edition had the same formal standing as the first. It did not.

Steele wrote in his “Preface SECOND EDITION”:

I wish to be very clear: this book is not an official document of X3J13, though it is based on publicly available material produced by X3J13. In no way does this book constitute a definitive description of the forthcoming ANSI standard. The committee’s decision have been remarkably stable (it has rescinded earlier decisions only two or three times), and I do not expect radical changes in direction. Nevertheless, it is quite probable that the draft standard will be substantively revised in response to editorial review or public comment. I have therefore reported here on the actions of X3J13 not to inscribe them in stone, but to make clear how the language of the first edition is likely to change. I have tried to be careful in my wording to avoid saying “the language has been changed” and to state simply that “X3J13 voted at such-and-so time to make the following change.”

Notwithstanding Steele’s caveats, the book filled an important gap in a market because the original book was missing functionality so critical that useful programs were difficult to write. So vendors were pressured into implementing what it said for want of something better.

This was something of a practical problem because the book had been more or less a snapshot of ongoing work at a time that was unsynchronized with committee work, with no real attention paid to making sure that the particular time of that snapshot was one that the community would want to stand by.

In fact, some of the functionality that had been already approved when Steele’s book went to press was later rescinded. In theory, that was not an issue since the committee had published no standard. The features in question were not part of the original CLTL from which X3J13 had begun, nor would they appear later in dpANS Common Lisp and ANSI Common Lisp. And yet, users sometimes felt these were “incompatible changes” that should have been managed differently and explained better.

An example was the so-called “environmental inquiry” functions (not the traditional UNIX getenv operation, but rather functions allowing macros to reflect on the state of the lexical environment at macro expansion time, including type declarations). The effort to do new design in a timeframe suitable for standardization was not converging quickly enough. Basically, this functionality was considered desirable by the committee but was removed for fear that it would become, to use Steele’s language, “inscribed in stone” before their bugs were fixed. And yet, because of Steele’s new book, they already were inscribed in stone, at least pending an actual ANSI standard.

Even at the time of CLTL2, there were definite benefits of the book’s availability. It probably held an ailing market together long enough to reach the conclusion of the standards process. But it was not without administrative pain as continuing standards work diverged from what CLTL2 had appeared to promise.

It’s interesting to note the power that Steele’s book had on the design process. I recall a specific situation in a Common Lisp meeting in which a vote was being taken on renaming the macro named define-setf-method. Some Common Lisp users had been confused by the macro’s name into thinking it would define CLOS methods named setf, which was not the case at all. During a discussion of renaming define-setf-method to be define-setf-expander, a concern was raised by a committee member that this would be an incompatible change and he would no longer be able to use CLTL2 as a reference.

I was astonished by this claim and countered that we had already voted a number of semantic changes to the language that were incompatible with CLTL2. The reply only further astonished us. There is no formal record of this discussion, so I can only paraphrase from memory: “Yes, but those changes didn’t change the names of the functions. As long as the names are the same, I can keep using the old document.” Steele chimed in at this point saying that if it took changing some names in order to make sure people didn’t mistakenly think that ANSI CL had the same semantics as CLTL2, that was enough reason to vote for the name change all by itself. The name change was approved.

At the last minute, X3J13 received advice about international character sets from the Japan Electronic Industry Development Association (JEIDA) Common Lisp committee. In addition to a rationale document, “JEIDA Nihongo Common Lisp Guideline,” authored by Masayuki Ida and Takumi Doi, a specific proposal in the ordinary X3J13 issue format was provided by Masayuki Ida. After some simplifications were made, the proposal for issue EXTERNAL-FORMAT-FOR-EVERY-FILE-CONNECTION was voted on at the April 1, 1992 meeting and ultimately accepted.

In March 1992, a draft was ready for public review. This raised the level of the document to a draft proposed American National Standard, sometimes called dpANS Common Lisp.

dpANS Common Lisp was made available for Public Review, a somewhat lengthy process in which members of the public are allowed to make technical comments on the standard. The process requires that the committee give individual attention to each comment, although it does not require that action be taken. The committee must either make the proposed change or state its reason for not making the change.

In 1992, Symbolics Inc. was doing poorly financially because it was continuing to sell hardware and its computers were not hitting the price point demanded by the market. A last-minute effort was underway to produce a software-only emulator of the architecture, but I was not part of that effort. My work on standards was just a distraction to the ailing company, so it was not very surprising that I became a casualty of another in a series of layoffs that had been regular features of the struggling firm for quite some time.

Upon hearing that I was laid off, I sent out email from Symbolics to the other participants in the standards process letting them know that I had been laid off. The next morning, I was awakened at 8 a.m. by a phone call from Harlequin suggesting that I come work for them and continue my work. I met with Jo Marks, CEO of Harlequin. He very graciously understood my reasoning for wanting not to make an instant decision and told me that the offer would remain open for a while so that I could explore my options. (Ultimately, I did sign on board with Harlequin.)

During the time that I was not employed, though, I continued work on the standard. Also, there was a meeting of WG16, the ISLISP committee, scheduled for that time which I had agreed to host. We convened the meeting at the Royal Sonesta hotel in Cambridge, but there were so few attendees that we packed up and took the meeting to my house instead.

One morning, I woke up quite early and decided to write an emulation of the ISLISP language in Symbolics Common Lisp. I found this to be generally easy to do, except for a couple of small problems. LAMBDA was required to operate in the SCHEME style in ISLISP, without a surrounding FUNCTION special form, but CL did not support this. Also, ISLISP had global lexical variables and a different semantics for DEFCONSTANT. I took notes on these problems and wrote feedback on the standard to be submitted as Public Review comments on behalf of the ISLISP community. These comments later led to the availability of LAMBDA as a macro form in ANSI Common Lisp, as well as to the presence of the DEFINE-SYMBOL-MACRO operator.

As a matter of history, there were various aspects of Public Review that were interesting. To tell them all would take more space than I have.

One issue of note was that during the public review a controversy arose over my having chosen the term “generalized boolean” as separate from “boolean.” This was not a technical change but an editorial one, and it was made for clarity. Various functions were noted as returning generalized booleans, and an objection was raised by a commenter. It was a great surprise to people to learn that it was actually Steele who had made the technical change in the original CLTL, and that all I had done with the emerging standard was to make his writing sufficiently clear so that it could be noticed by people. By this metric, I judged, the change in terminology had been a success. The objection was resolved by providing an explanation, making no technical change to the emerging standard.

In 1994, the standard was approved for publication.

Subject matter expertise and writing skill were important factors for any success as an editor, but I think that in the end neither of these was the most important.

With a document this large and a time span this long, it was necessary that the community have complete trust that the only reason a change might be made to the meaning of the language was if there was a corresponding technical change voted by the committee.

From time to time, people would request small changes that they insisted were mostly editorial, and I had to resist the urge to comply with such requests even though the suggestions often did seem like good ideas from a technical point of view.

And yet, most of the time there was a great deal of editing going on. It was supposed to make the document read better but still preserve the old meaning. So, for example, if a certain aspect of the text of CLTL was meaningless or ambiguous or useless because of how it was written, my job as editor was to make the text clear enough that the meaningless, ambiguous, or useless nature was more transparent. I would sometimes say the job was to transform text from “implicitly vague” to “explicitly vague.” The job was definitely not to make up meaning out of nowhere.

So, in retrospect, I would reiterate that the primary qualification for Editorship was trustworthiness—particularly, the ability to resist the urge to “meddle” in technical matters while acting in the role of “neutral editor.”

Although approved in 1994, it took almost a full extra year to get this large document printed. Various things still had to happen. It would not be until 1995 that the document would be published.

ANSI was very concerned about a number of small typographical details and needed changes made to the book to have the standard conform to their needs.

In the process, I slightly modified the book design to make better use of vertical space. The drafts until that point had been taking about 1350 printed pages, but by cleverly contracting vertical whitespace in very small ways, I was able to save about 200 pages in the final work. The ANSI Common Lisp specification is only about 1150 pages, but contains pretty much the same core text as the 1350 page drafts; it just takes up less space.

I was employed by Harlequin, telecommuting from my home, while finalizing the standard. My liaison at ANSI had contacted me about some small edits they wanted made prior to formal publication of the already-approved standard, primarily changes to the look of headers and the fact that a bunch of page numbers were wrong in the appendix.

Oh, and they wanted us to give them the copyright.

A discussion ensued in which the following suggestions were made. It might be that I made a more contemporaneous record of the conversation, but I have thus far been unable to locate that record, so this reconstruction of the conversation is from memory and may be wrong in minor details. Also, I will use quotation marks here in order to make the presentation more readable, but those marks should not be construed to imply precise quotation, merely to imply the notion of someone speaking to someone else. I think this captures the essential character of what happened.

“I can’t do that,” I explained by phone one day, remembering my problems with MIT and copyrights from years before. “My work has been done under contract with a great many people who have paid for the work to be done, on condition that it be placed into the public domain.”

The person I was talking to alleged that standards are owned by the committee that makes them. This flew in the face of my understanding of how copyright worked. I thought copyright was owned by the author or, when the author was paid to do the writing, by the entity that had paid the author.

A court case was cited that sounded dubious to me. Since it was a phone call, I have no written record of the precise case. My vague recollection was that it was a regional case, nothing that had gone to the Supreme Court. Allegedly, the case created some precedent for the notion that if a committee writes something as a group, the committee holds copyright in the result.

“Oh,” I explained, “we didn’t do it that way. Our committee only voted on the truth of things, not on the text.”

Having previously done a great deal of reading on the issue of copyright, I knew that the US Code for copyright [USC17] is rather specific on this point. The code pretty clearly says that “Copyright protection subsists [...] in original works of authorship fixed in any tangible medium of expression” and goes on to say, “In no case does copyright protection for an original work extend to any idea, procedure, process, system, method of operation, concept, principle, or discovery, regardless of the form in which it is described, explained, illustrated, or embodied in such work.” In other words, copyright protects the form of an idea but not the idea itself. And since X3J13 had concerned itself with the creation of documents about truth, not with the creation of documents telling me what to write, X3J13 as a group had specifically and intentionally elected not to be an author of the resulting document.

“Copyright,” I explained, “covers the form of text, not the truth. So it doesn’t seem likely that such a court precedent would apply.”

“Also,” I continued, “X3J13 isn’t a legal entity. So we don’t think it could own anything. That’s why we wrote contracts among ourselves to accomplish the funding of the project, not contracts with the committee.”

“And anyway,” I went on, “the cash flow is in the wrong direction. You [ANSI] are taking in money for X3J13 fees. You’re not paying us, we’re paying you. Maybe if you were paying us, you might own our work. But we’re paying you for membership. Moreover, we’ve paid about $425,000 in enumerable expenses for salary of people who worked on making the standard. We bought the creation of the standard and we’re not giving it away to ANSI.”

“We, as a community, expect to continue to own the spec. My understanding is that copyright typically resides with whoever pays for the creation, so I doubt that could be ANSI. My recollection is that I told them I was surprised that they could have built their business on such a strange foundation—the strength of a single court case.

“We could just decide to go ahead and make the changes ourselves,” I was told. “Oh,” I explained, “that wouldn’t be good. Our names are on the document, you wouldn’t want to be publishing something we didn’t write under our name. That sounds like it could be fraud or something.”

“Well, we could use what you’ve already given us.” “Gee, I said, it has all those headers you don’t like and that index with all the wrong page numbers. I doubt you’d want that.”

“Well, we could just retract the standard. It’s not published yet. We could just not publish it. Oh, I said, that would be very helpful to us because Steele went and published CLTL2 and it’s created a lot of confusion in the market. We’d be happy to just use that, since that’s what everyone is implementing right now anyway.”

“So what do you suggest?” they finally asked.

“Well,” I suggested, “I’m not a lawyer, but my guess is that if we gave you something without giving you the explicit copyright and you just wrote your own copyright on it, no one would question that. I can’t promise you that, of course, but it seems like your best option. You could probably take a page from the way West Law does US court cases and copyright the headers and the page breaks, not the underlying text, and then say that although the main document is not yours, the official copy, the one that is the standard, is the one with all those headers of yours, and that can only be obtained from you.”

“But, bottom line,” I explained, “I’m not authorized to sign over any copyright to you and I’m not going to do that.”

In 1993, the Mosaic web browser was released to the world by NCSA, although I personally mark the birth of the web in 1994. I saw the Shoemaker-Levy comet impact on the web and then a little while later on television and it suddenly struck me that if the Internet could get me information faster than the news, the world would never be the same.

I had been working on the creation of a hypertext version of ANSI Common Lisp using the proprietary notation that Harlequin used for its Lisp documentation. It struck me that it might be useful to construct an HTML back end instead, in order to be able to browse the documentation in Mosaic. It was an easy change to make and pretty soon the first version of the Common Lisp HyperSpec was available for internal use at Harlequin. It was an instant hit, but was kept confidential while we discussed how to present it to the world.

I demonstrated it to Jo Marks asking if I could put it on the web. He was appreciative of its size but had an intense concern about equity and fairness among the parts of Harlequin and seemed concerned that the other divisions of the company had nothing similarly flashy to offer and that it might be perceived as some form of favoritism.

I was greatly saddened by this initial reluctance. The document was about 16MB and contained 105,000 links (what I sometimes referred to as 105 “kilohyperlinks”). There was no way to know for sure, but it seemed to me that it might be one of the biggest documents on the web at that point, and that it would draw attention not only to LispWorks as a product but to the possibilities of the web and of Lisp just because of how it was generated. But it was not to be. At least not yet.

In 1995, Guy Steele released a webbed version of Common Lisp: The Language, Second Edition.

Most of the world was thrilled by this. I was crushed. Harlequin had sufficiently delayed the release of CLHS that I was quite sure it would never be published. At best, my effort would seem like a last-minute afterthought inspired by Steele’s work.

I pleaded with Harlequin not to let my work waste away without ever seeing the light of day. It seemed to me that what should be an important life achievement was going to languish in some metaphorical basement, never to be seen by the world. Or, even if it was seen, I feared it would be perceived as uninteresting.

I continued to press for the release of CLHS.

An unresolved issue was how it should manifest from a commercial and legal standpoint, even if it were approved for release. Gaining appropriate approvals relied on getting this sorted out since the nature of the approval was quite different in the various cases. I had suggested that there were two primary ways of proceeding: bundling the documentation with the LispWorks product or releasing it to the web detached from the product.

Over time, I continued to urge various different uses for the new tool. One theory I offered was that it might be bundled as documentation into the LispWorks product, giving the product a unique bit of value not matched by competing Lisp products.

But the idea of doing something on the web was growing in popularity as people had come to understand the significance of the web better. A competing theory, also my own idea, was that CLHS should be what I then described as an “advertising virus.” By “virus” I didn’t mean anything sinister. I meant only that the document would be copied from place to place by people wanting to read and use it. Harlequin’s corporate logo was firmly affixed to it by copyright requirements, so would be copied along with it.

Harlequin was initially concerned that if we allowed users to download the document for their own use, the company would not be able to track the number of “hits.” Statistics-taking was everything then because no one understood what the web was doing, and we were all trying to figure out what its uses were, based on understanding usage patterns. So the idea of allowing a substantively interesting usage pattern to happen without being measured was odd.

In spite of this, I convinced them that it was more important that the document reach everyone than that we be able to count how many people it reached. Eventually this argument prevailed. Harlequin and LispWorks were, at the time, not as well known as “the big three” Lisp vendors (Symbolics, Lucid, and Franz). I alleged that my plan would put Harlequin and LispWorks on the map. The kind of license I wanted to make involved a somewhat unfamiliar paradigm for Harlequin’s legal team, but they worked with me to cobble together the right wording for a license—I sketched out what I wanted and they filled in the details.

I also resisted strong pressure to add features in the document to take advantage of this or that HTTP service, perhaps providing search capabilities or other active features. The index and cross-references were carefully designed to give functionality without requiring special HTTP support so that the document was made of pure HTML and could run anywhere that an HTML document could exist.

Finally, in 1996, well over a year after the original creation of version 1 of its creation, the Common Lisp HyperSpec made its debut on the web stage. To my great relief, it was not just seen as a knock-off of CLTL2. The fact that the webbed version of CLTL2 was technologically easier to produce because CLTL2 was written in LaTeX, while CLTL and the ANSI Common Lisp specification were written in raw TeX was a subtlety that went by most users. Its heavily cross-indexed nature, large glossary, and dictionary-style organization were substantive features that helped to overcome its lack of tutorial presentation order for some readers. The graphics were carefully kept to simple two-color buttons to minimize download times on slow connections.

It is sometimes easy to indulge the illusion that activities like language design and standardization are magically governed by the same kinds of rule-based orderliness that we like to apply to the collateral manipulated by such activities, such as programs and programming languages. Yet, as with many collaborative human endeavors, chaos, chance and luck all played their part in shaping the outcome.

I have offered more details about my role than about the roles of some others in this process, not because my role was more important than that of a dozen or two other key players, but merely because I have more detailed knowledge of my personal journey than I do about the journeys of others. It is my hope that by telling about my own participation, I have managed to make some broader points than merely “look at all I did.” I have tried to offer hints about where and how the paths of others have crossed with my own to produce a useful outcome, sometimes in spite of and sometimes because of accidental characteristics of individuals—their identities, their histories and skills, and even their preferences and phobias.

Susan Ennis was only present early in the process, but made an important contribution by focusing us on a good charter at a critical time. Gary Brown brought in Kathy Chapman as the first Project Editor, doing a lot of tedious text editing required to convert Guy Steele’s original work into dictionary format. Larry Masinter had a particular way he wanted to write the “cleanup issues” that turned out to simplify and organize the management of those issues in profound ways. The executive team at Franz Inc. took important steps to assure funding of the standard at a critical time. Jo Marks brought Harlequin into the picture to fill critical funding gaps when they came up, and then later funded the creation of the Common Lisp HyperSpec.

Gabriel’s remark that I was the minimal necessary person needed to finish the task, taken in hindsight, offers an opportunity for a slightly different perspective. That is, the requirements for the successful completion of the task were, I think, broader than we might have been focusing on. Had the group focused exclusively on getting someone who was a formal semantics expert, that person might have lacked the requisite writing skill. Had they sought someone with specific credentials in writing, there might have been some other absent capability. My background seemed to be what was needed to get the job done. Even my early misfortune with the copyright for my MACLISP manual informed my later dealings with ANSI about the copyright for the standard.

One might wish to regard the output document of a standard as formal or scientific in various ways, but my sense is that the process of making such a standard is not very scientific—certainly our process was not scientific. In general, chance and good fortune mattered. Small actions sometimes had profound effects. Cash flow mattered at various places in important ways, as did control of intellectual property.

The process was a messy one, but it went well. I hope that by candidly discussing some of the issues involved in the process, there is something to learn for other so that future such messy processes will nevertheless be successful as well.

I am grateful to Nancy Howard, who previewed various drafts of this document and offered a number of useful suggestions about spelling, grammar, and presentation. Under a great deal of time pressure because I waited to the last minute to allow him a chance to look at it, Richard Gabriel read a near-final draft and still managed to make several substantively useful comments on both my presentation and the historical detail.

I am also grateful to Pascal Costanza, Charlotte Herzeel, and Richard Gabriel for their administrative and logistical help in getting me to this conference to tell my story, and for their patience while I put together the written account to clarify some of the things I said, or intended to say, in the oral presentation.

[J13SD05]

X3J13. Purposes of X3J13 Committee,

X3J13 Standing Document X3J13/SD-05, 1997.

http://www.nhplace.com/kent/CL/x3j13-sd-05.html

[Moon83]

Moon, D., Weinreb, D.

Signaling and Handling Conditions,

Symbolics Inc., Cambridge, MA, 1983.

[Pitman83]

Pitman, K.M.,

The Revised Maclisp Manual.

Technical Report 295, MIT Laboratory for Computer Science,

Cambridge, MA, 1983.

http://www.maclisp.info/pitmanual/

[Pitman96]

Pitman, K. M.,

Common Lisp HyperSpec.

1996.

http://www.lispworks.com/documentation/HyperSpec/Front/

[Steele84] Steele, G.L. et al, Common Lisp: The Language. Digital Press, 1984.

[Steele90]

Steele, G.L. et al,

Common Lisp: The Language, Second Edition.

Digital Press, 1990.

http://www.cs.cmu.edu/Groups/AI/html/cltl/cltl2.html

[USC17]

US Code, Title 17, Chapter 1, Section 102.

http://www.law.cornell.edu/uscode/html/uscode17/usc_sup_01_17_10_1.html

[Xerox92]

X3J13 document repository

ftp://ftp.parc.xerox.com/pub/cl/

[Yates93]

Yates, J., Orlikowski, W.J., “Knee-jerk Anti-LOOPism and other E-mail Phenomena:

Oral, Written, and Electronic Patterns in Computer-Mediated Communication.”

Technical Report #150, Center for Coordination Science, Cambridge, MA, 1993.

http://ccs.mit.edu/papers/CCSWP150.html